Seeing with infrared eyes: a brief history of infrared astronomy

| William Herschel, 1738–1822. Credit: Royal Astronomical Society |

It all began at the turn of the 19th century, when the German-born musician turned English astronomer William Herschel was living in Slough, serving as the King's Astronomer in the country he had made his home since 1757. He was worried about climate change and, through a string of circumstances, this concern led him to the discovery of infrared radiation.

His concern with the climate was sparked by the discovery that some stars varied their brightness. This suggested that the Sun might also change and Herschel began to look for such variability.

Observing the Sun is a dangerous business, however. Just looking at it with your naked eye can cause permanent eye damage. So, to observe the fiery surface with a telescope, Herschel had to find some way to cut down the light and the heat. He began experimenting with coloured glass and made a curious discovery.

While red glass stopped most of the light from the Sun, too much heat got through. Green glass, on the other hand, cut out the heat but let through too much light. At the time, it was thought that all the colours carried equal amounts of heat, so Herschel investigated further. Using a prism and a set of thermometers, he measured the heat carried by each of the colours and found that red light carried more heat than green, which in turn carried more heat than blue.

In a subsequent experiment, Herschel placed a thermometer just beyond the red end of the spectrum as a control, to check that he was not being fooled by the rising temperature of the surrounding room. He found that the temperature beyond the red was even higher, while a thermometer beyond the blue end stayed low. From this he concluded that the Sun was emitting invisible rays that carried large quantities of heat. He called them 'invisible rays that occasion heat', what we now call infrared radiation, and he published his discovery in 1800.

While thermometers could measure the heat of the Sun, other celestial objects gave out far too little heat to be measured easily. In 1821, the physicist Thomas Johann Seebeck discovered that when two different metals are joined in a circuit and their junctions are held at different temperatures, an electrical current flows. This led to the development of the thermocouple, a device capable of registering subtler differences in heat than a mercury thermometer.

In 1856, astronomer Charles Piazzi Smyth put the thermocouple to astronomical use at an observatory he built on Guajara peak on Tenerife, where he detected infrared radiation from the full Moon. Repeating the measurement at different altitudes during the climb to the peak, he found that the higher he was, the more infrared he detected. This was the first evidence that something in our atmosphere absorbs infrared rays.

| From left to right: Thomas Johann Seebeck, Charles Piazzi Smyth, Laurence Parsons, and Samuel Pierpont Langley. |

Laurence Parsons, the 4th Earl of Rosse, measured lunar infrared signals at different phases of the Moon in 1870 but, in general, progress was slow, mainly because the early thermocouples were limited in their sensitivity. As with so much of astronomy, the pace of scientific discovery was set by the speed at which the technology developed.

The bolometer was invented in 1878 by American astronomer Samuel Pierpont Langley. It was sensitive to longer infrared wavelengths than those Herschel had discovered and the thermocouples had measured. This led to the idea that the infrared could be split into two distinct parts: the near-infrared, measured by thermocouples, and the far-infrared, measured by bolometers.

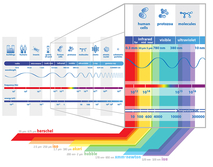

| The electromagnetic spectrum, from IR to UV. Credit: ESA/AOES Medialab |

Today, astronomers recognise three regions: the near-infrared, with wavelengths from about 1 to 5 micrometres; the mid-infrared, from about 5 to 30 micrometres; and the far-infrared, extending to about 300 micrometres.

In the early 1900s, the technology continued to develop. By joining thermocouples together, astronomers created more sensitive thermopiles. A small number of astronomers used these to make the first infrared measurements of stars and of the planets of the Solar System. These measurements yielded more than simple catalogues of infrared brightness; they also provided the first scientific insights.

The diameters of the giant planets were calculated from the detections, and the differences between the solar surface and sunspots were investigated. Yet despite these clear advances, infrared astronomy failed to enter the mainstream of astronomical thinking. That changed after the Second World War.

As is so often the case, technology leapt forward in wartime. In 1943, Germany began testing the Nacht Jager, an infrared device that could be used to see in the dark. Such night-vision technology used lead sulphide as the detector: the material's electrical resistance changed when struck by infrared radiation, and its sensitivity increased when it was cooled.

After the war, this technology was developed for civilian use, and by the 1950s astronomers were using it to detect celestial infrared sources. To make the detectors as sensitive as possible, they were placed in dewars, a type of thermos flask. The first coolant used was liquid nitrogen, which could lower the temperature to around 80 K (approximately -190 °C).

With such devices, astronomers measured infrared spectra of the planets. This allowed them to search for atmospheric constituents that are much harder, or impossible, to detect in visible light. The results revealed carbon dioxide in the atmospheres of Venus and Mars, and methane and ammonia in Jupiter's.

In subsequent years, liquid helium was used to drop the temperature even further, to within a few degrees of absolute zero. Liquid helium remains the coolant of choice even today.

Following up on Piazzi Smyth's discovery that the atmosphere absorbs infrared, astronomers throughout the 1960s built better and more autonomous detectors and flew them on aircraft, balloons and sounding rockets, above much of the absorbing atmosphere.

Significantly, the results revealed infrared sources in the sky that were not associated with any previously known objects. Clearly, the development of infrared technology was giving scientists brand new eyes. This was not just a technology that would increase our knowledge of known objects but one that could reveal a wider, hidden cosmos.


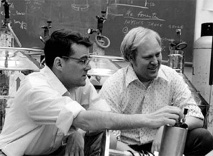

| Gerry Neugebauer and Eric Becklin. Credit: Caltech Archives |

An early, significant discovery for infrared astronomy came in 1967, in the form of the Becklin-Neugebauer object. It was discovered by California Institute of Technology astronomers Eric Becklin and Gerry Neugebauer while they were surveying the Orion Molecular Cloud complex. When observed at 2.2 micrometres, it appears to be about the size of our whole Solar System, with a temperature of 700 K (about 430 °C).

Becklin and Neugebauer suggested that this was a dusty cocoon surrounding a newborn star of a dozen or more times the mass of the Sun. The discovery raised the standing of infrared astronomy because it proved that these wavelengths could reveal new classes of celestial object that were unobservable in the optical region of the spectrum. Until then, many astronomers had assumed that surveys at 2.2 micrometres would simply see the infrared light emitted by already visible stars. By proving that it could peer into dark, dusty places from which visible light cannot escape, infrared astronomy showed that it was essential to the study of forming stars, which would otherwise have been hidden from view.

By the end of the 1960s and throughout the 70s, several astronomical observatories around the world established telescopes dedicated to infrared observations. Finally, the discipline entered the mainstream of astronomy and the discoveries began in earnest.

The study of galaxies was given a large boost. The so-called active galaxies, those with feeding black holes at their centres, were shown to be extremely bright at infrared wavelengths. Other, apparently quiescent, galaxies were also highly luminous in these invisible rays. These were interpreted as galaxies in which stars were forming in extremely large numbers, and they were dubbed starburst galaxies.

In these years, however, astronomers faced a trade-off. Ground-based observatories allowed long-duration observations but at a restricted number of infrared wavelengths. In studying the way in which the atmosphere absorbs infrared, astronomers had shown that some wavelengths are absorbed much more strongly than others. This meant that even at the mountain-top observatories, certain wavelengths were impossible to see. To capture them, astronomers had to make do with much more fleeting observations from balloons or sounding rockets.


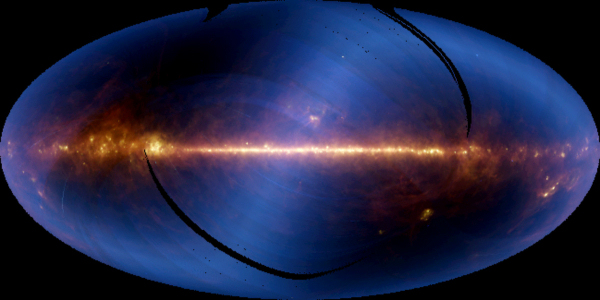

| Infrared all-sky survey by IRAS. Credit: NASA/JPL-Caltech |

To obtain long-duration observations at all wavelengths, the answer was clearly to put infrared satellites into orbit. The 1970s and early 80s were dedicated to this goal, which culminated in 1983 with the launch of IRAS (Infrared Astronomical Satellite). This joint mission between the US, the Netherlands and the UK lasted for 10 months. In that time, it mapped 96% of the sky, detecting more than a quarter of a million sources at wavelengths of 12, 25, 60 and 100 micrometres.

An estimated 75,000 of these sources are infrared-dominated starburst galaxies, showing how busily the Universe is creating stars. IRAS also showed us the centre of our own Galaxy, the Milky Way, in infrared radiation for the first time. But perhaps the most intriguing discovery came from the star Vega.

Easily visible in the night sky, Vega is a bright young star that burns with a bluish-white colour. Intriguingly, IRAS showed that it was emitting more infrared than expected. A handful of other stars also showed such ‘infrared excesses’, among them the southern-hemisphere star Beta Pictoris.

A year after the discovery, astronomers Bradford Smith and Richard Terrile went looking for the source of the infrared excess at the Las Campanas Observatory in Chile. By blotting out the light from the star, they saw the ghostly image of a dust disc surrounding it. The infrared light was being radiated by cool dust in orbit around the star. The dust itself is continuously replenished by ongoing collisions between rocky bodies that are at least the size of planetesimals and could be larger.

IRAS opened the way for a family of infrared satellites that continues to the present day. It was followed by ESA's ISO (launched in 1995), NASA's Spitzer (launched in 2003), and JAXA's AKARI (launched in 2006). Then, on 14 May 2009, ESA's Herschel was launched.

| Clockwise from lower left: AKARI, Spitzer, IRAS, ISO (in the cleanroom), Herschel (in the cleanroom), and Herschel being launched. |

With a telescope 3.5 metres in diameter, Herschel carried the largest single mirror ever built for a space mission. Its observations have shown just how important infrared wavelengths are to our understanding of the Universe.

Thanks to Herschel, it is now clear that stars are far more than just beacons of light. They are engines of change that shape the appearance of their host galaxies. Sometimes their fierce radiation prompts more stars to form; at other times it stops the process in its tracks.

In almost four years of observations, Herschel revealed a remarkable story of star formation and traced it from the nearest regions of the Galaxy to the most distant realms of space. This complex interplay between stars and their galaxies shows that we cannot uncover the full history of the Universe without studying the formation of stars in detail.

| Herschel unlocked the secrets of star formation, uncovered the cosmic water trail, chronicled galaxy evolution, and left an important legacy. |

Now the space agencies are preparing the next chapter together.

| Artist's impression of the James Webb Space Telescope. Credit: Northrop Grumman |

The James Webb Space Telescope (JWST) is widely described as the successor to the Hubble Space Telescope. It is designed to see into the very distant Universe, where the expansion of the Universe has stretched ultraviolet and visible light into the near- and mid-infrared; for this reason, JWST has been built to work at these wavelengths. It is almost as big as a Boeing 737 airliner and will travel more than one and a half million kilometres from Earth to carry out its mission, in an orbit similar to the one Herschel used. A sunshield will keep the instruments and telescope cool to increase their sensitivity.

When operational, JWST will continue to open our eyes to the wonders of the infrared Universe. It will investigate star and planet formation, from the appearance of the first stars and galaxies in the Universe to those forming around us today. It will look for the building blocks of life in deep space and detect the atmospheres of planets around other stars.
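
The stretching of light by cosmic expansion follows a simple relation: light emitted at wavelength λ_emit by a source at redshift z is observed at a longer wavelength λ_obs. As an illustrative sketch only (the redshift z = 10 and the two emitted wavelengths are values assumed for the example, not figures quoted for JWST), the arithmetic runs:

$$\lambda_{\text{obs}} = (1 + z)\,\lambda_{\text{emit}}$$

$$z = 10:\qquad 0.1\ \mu\text{m} \;\rightarrow\; 11 \times 0.1\ \mu\text{m} = 1.1\ \mu\text{m}, \qquad 0.5\ \mu\text{m} \;\rightarrow\; 11 \times 0.5\ \mu\text{m} = 5.5\ \mu\text{m}$$

Under these assumptions, ultraviolet light from such a distant galaxy arrives in the near-infrared, and visible light arrives just inside the mid-infrared, using the wavelength ranges astronomers recognise for those regions.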

Yet if the history of infrared astronomy teaches us anything, it is that the most exciting discoveries are not the ones we expect or hope for but the unexpected ones – the ones that come completely out of the blue. Or perhaps more appropriately in this case, out of the infrared.